Cache

- RFC PR: datafuselabs/databend#6799

- Tracking Issue: datafuselabs/databend#6786

Summary

Add cache support for Databend Query so that our users can load hot data from our cache services instead of reading from backend storage services again.

Motivation

Databend's design is based on the separation of storage and compute.

As a result, Databend supports different cloud storage services as its backend, such as AWS S3, Azure Blob Storage, and Google Cloud Storage. The advantage is very high aggregate throughput; the disadvantage is that the latency of each individual request increases.

Adding a caching layer will improve the overall latency and reduce the number of unnecessary requests, thus reducing costs.

Databend used to have a local disk-based cache at common-cache. This implementation uses databend-query's local path to cache blocks.

Guide-level explanation

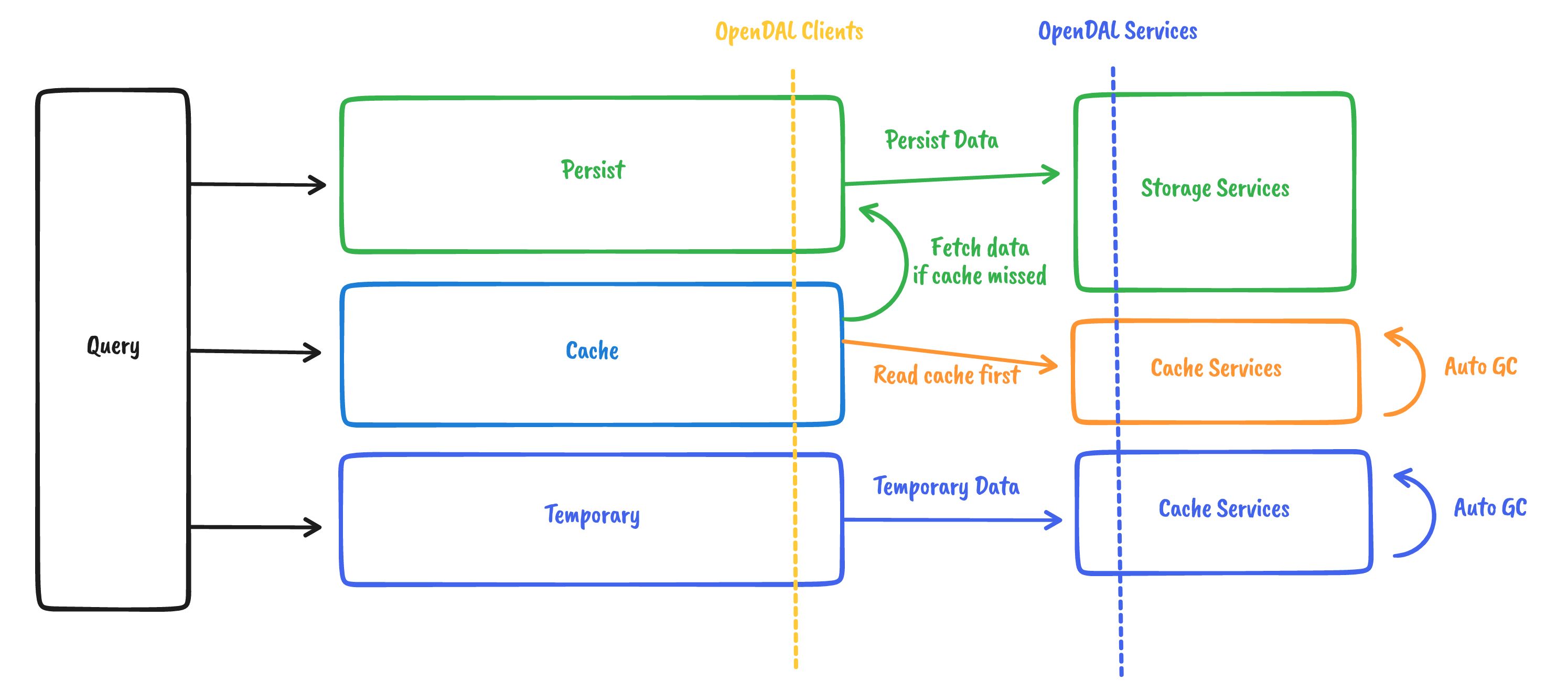

After introducing cache, the databend storage layer will be composed as follows:

The query will maintain three OpenDAL operators:

- Persist Operator: Read and write persistent data from/into storage services directly.

- Cache Operator: Read and write data via transparent caching.

- Temporary Operator: Read and write temporary data from/into cache services.

The storage services and cache services mentioned here are both OpenDAL-supported services. The difference is that a cache service's backend may have its own GC or background auto-eviction logic, which means cache services are non-persistent, i.e. volatile.

The query should never try to write persisted data to Cache Operator or Temporary Operator.

For End Users

Users can specify different cache services for caching or for storing temporary data. For example, they can cache data on the local fs (the same as the current behavior):

    [cache]
    type = "fs"

    [cache.fs]
    data_path = "/var/cache/databend/"

Or they can cache data into Redis:

    [cache]
    type = "redis"

    [cache.redis]
    endpoint = "192.168.1.2"
    password = "PASSWORD"

Temporary data may be shared across the whole cluster, so users must use a distributed cache service instead of local fs or memory:

    [temporary]
    type = "opencache"

    [temporary.opencache]
    endpoints = ["192.168.1.2"]

For Developers

As discussed before, developers now have three kinds of Operator to handle different workloads:

- Persist: Read and write data directly without caching logic, similar to O_DIRECT.

- Cache: Read and write via transparent caching, similar to the kernel's page cache.

- Temporary: Read and write temporary data to cache services, similar to Linux's tmpfs.
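The choice between the three operators can be sketched as a small routing function. This is only one possible reading of the rules above, not Databend's actual code: `OperatorKind`, `DataClass`, `Access`, and `operator_for` are hypothetical names introduced for illustration. The sketch assumes that reads of persisted data go through the Cache operator, while writes of persisted data always go through the Persist operator, following the rule that persisted data is never written to the Cache or Temporary operator.

```rust
/// Which of the three operators a request should go through.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum OperatorKind {
    Persist,
    Cache,
    Temporary,
}

/// Hypothetical classification: must the data survive, or may it be lost?
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum DataClass {
    /// Data that must never be lost, e.g. table blocks.
    Persisted,
    /// Intermediate results that may be evicted at any time.
    Temporary,
}

/// Hypothetical access mode of a request.
#[derive(Debug, Clone, Copy, PartialEq, Eq)]
pub enum Access {
    Read,
    Write,
}

/// Picks an operator for a request (an assumed policy, see the lead-in).
pub fn operator_for(class: DataClass, access: Access) -> OperatorKind {
    match (class, access) {
        // Temporary data only ever lives in cache services.
        (DataClass::Temporary, _) => OperatorKind::Temporary,
        // Persisted data is written to storage services directly.
        (DataClass::Persisted, Access::Write) => OperatorKind::Persist,
        // Reads of persisted data may be served via transparent caching.
        (DataClass::Persisted, Access::Read) => OperatorKind::Cache,
    }
}
```

A caller that needs to bypass caching for a read, similar to O_DIRECT, would simply use the Persist operator instead of consulting such a policy.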

Reference-level explanation

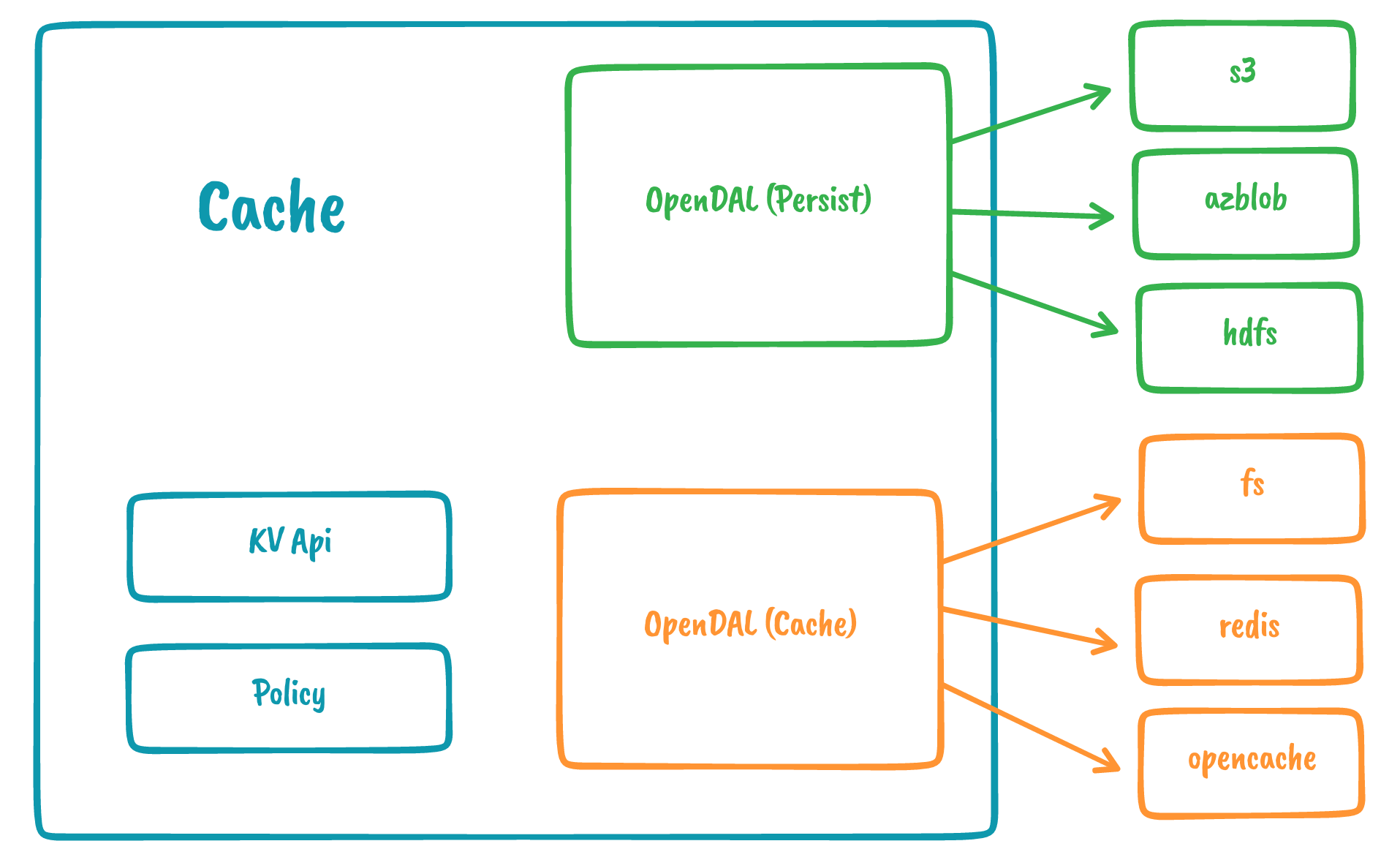

Cache Operator

Cache Operator will be implemented as an OpenDAL Layer:

    #[derive(Clone, Default, Debug)]
    pub struct CacheLayer {
        // Operator for persist data (via `storage` config)
        persist: Operator,
        // Operator for caching data (via `cache` config)
        cache: Operator,
        // Client for KVApi
        kv: Arc<dyn KVApi>,
    }

    impl Layer for CacheLayer {}
    impl Accessor for CacheLayer {}

databend-query will initialize the cache layer along with the persist operator:

    pub fn get_cache_operator(&self) -> Operator {
        self.get_storage_operator().layer(CacheLayer::new())
    }

The detailed implementation of CacheLayer is not discussed in this RFC.
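To make the idea of transparent caching concrete, here is a minimal read-through sketch that uses only the Rust standard library. It is not the CacheLayer implementation and does not use OpenDAL's API: the `Storage` trait, `MemStorage`, and `ReadThrough` are hypothetical stand-ins, the `kv` client is left out, and the write path is deliberately omitted. It only shows that a read is served from the cache when possible, falls back to persistent storage on a miss, and fills the cache afterwards.

```rust
use std::cell::RefCell;
use std::collections::HashMap;

/// Hypothetical minimal storage interface (much simpler than OpenDAL's).
pub trait Storage {
    fn read(&self, path: &str) -> Option<Vec<u8>>;
    fn write(&self, path: &str, data: Vec<u8>);
}

/// In-memory stand-in for a storage or cache service that counts reads.
#[derive(Default)]
pub struct MemStorage {
    objects: RefCell<HashMap<String, Vec<u8>>>,
    reads: RefCell<usize>,
}

impl MemStorage {
    /// Number of read requests this service has received so far.
    pub fn read_count(&self) -> usize {
        *self.reads.borrow()
    }

    /// Simulates the service's own eviction logic dropping an entry.
    pub fn evict(&self, path: &str) {
        self.objects.borrow_mut().remove(path);
    }
}

impl Storage for MemStorage {
    fn read(&self, path: &str) -> Option<Vec<u8>> {
        *self.reads.borrow_mut() += 1;
        self.objects.borrow().get(path).cloned()
    }

    fn write(&self, path: &str, data: Vec<u8>) {
        self.objects.borrow_mut().insert(path.to_string(), data);
    }
}

/// Read-through wrapper: cache first, persistent storage on a miss.
pub struct ReadThrough<'a, P: Storage, C: Storage> {
    persist: &'a P,
    cache: &'a C,
}

impl<'a, P: Storage, C: Storage> ReadThrough<'a, P, C> {
    pub fn new(persist: &'a P, cache: &'a C) -> Self {
        Self { persist, cache }
    }

    pub fn read(&self, path: &str) -> Option<Vec<u8>> {
        // Fast path: the cache already holds the object.
        if let Some(hit) = self.cache.read(path) {
            return Some(hit);
        }
        // Miss: read from persistent storage, then fill the cache.
        let data = self.persist.read(path)?;
        self.cache.write(path, data.clone());
        Some(data)
    }
}
```

Because the cache is volatile, an evicted entry only costs one extra read from persistent storage; the data itself is never lost.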

Temporary Operator

The Temporary Operator will connect to a distributed cache service that presents the same view to the whole cluster. The query can write temporary intermediate results here to share them with other nodes.

Most of the work should be done on the cache service side. We will not discuss it in this RFC.
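The "same view for the whole cluster" property can be illustrated with a small in-process model. This is a sketch under loud assumptions, not a real distributed service: `TemporaryStore` and `QueryNode` are hypothetical names, and an `Arc<Mutex<HashMap>>` stands in for the remote cache service. It shows one node publishing an intermediate result, another node reading it, and a reader having to tolerate eviction.

```rust
use std::collections::HashMap;
use std::sync::{Arc, Mutex};

/// Hypothetical stand-in for a distributed cache service.
/// Cloning the handle gives another view of the same shared data.
#[derive(Clone, Default)]
pub struct TemporaryStore {
    inner: Arc<Mutex<HashMap<String, Vec<u8>>>>,
}

impl TemporaryStore {
    pub fn write(&self, key: &str, data: &[u8]) {
        self.inner
            .lock()
            .unwrap()
            .insert(key.to_string(), data.to_vec());
    }

    pub fn read(&self, key: &str) -> Option<Vec<u8>> {
        self.inner.lock().unwrap().get(key).cloned()
    }

    /// Simulates the service evicting an entry on its own schedule.
    pub fn evict(&self, key: &str) {
        self.inner.lock().unwrap().remove(key);
    }
}

/// Hypothetical query node holding a handle to the shared temporary store.
pub struct QueryNode {
    pub name: String,
    temporary: TemporaryStore,
}

impl QueryNode {
    pub fn new(name: &str, temporary: TemporaryStore) -> Self {
        Self {
            name: name.to_string(),
            temporary,
        }
    }

    /// Publishes an intermediate result for other nodes to pick up.
    pub fn publish(&self, key: &str, data: &[u8]) {
        self.temporary.write(key, data);
    }

    /// Fetches an intermediate result; `None` means it is missing or evicted.
    pub fn fetch(&self, key: &str) -> Option<Vec<u8>> {
        self.temporary.read(key)
    }
}
```

Since the store is volatile, a node that gets `None` from `fetch` needs some way to recompute or re-request the intermediate result.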

Drawbacks

None.

Rationale and alternatives

None.

Prior art

None.

Unresolved questions

None.

Future possibilities

OpenCache

OpenCache is an ongoing effort by the Databend community to build a distributed cache service. OpenDAL will implement native support for OpenCache once its API is stable. After that, users can deploy and use OpenCache as cache or temporary storage.